AWS History and Timeline regarding Amazon EventBridge - Overview, Functions, Features, Summary of Updates, and Introduction


This time, I have created a historical timeline for Amazon EventBridge (formerly Amazon CloudWatch Events), a service that detects events from AWS services, routes them to other AWS services based on conditions, and generates events.

As before, I follow Amazon EventBridge from its beginnings, track its feature additions and updates, and then summarize its main features in the section "Current Overview, Functions, Features of Amazon EventBridge".

I hope these will provide clues as to what has remained the same and what has changed, in addition to the features and concepts of each AWS service.

Background and Method of Creating Amazon EventBridge Historical Timeline

The reason for creating a historical timeline for Amazon EventBridge this time is that its predecessor, Amazon CloudWatch Events, which debuted in 2016, made it easier to implement many kinds of automation triggers with AWS services: event-driven and schedule-driven execution, as well as condition-based event generation. Because of this, I wanted to organize information about Amazon EventBridge with the following approach.

- Tracking the history of Amazon EventBridge and organizing the transition of updates
- Summarizing the feature list and characteristics of Amazon EventBridge

The timeline was compiled mainly from the following sources.

- What's New with AWS?
- AWS News Blog
- Document History - Amazon EventBridge
- Document History - Amazon CloudWatch Events

The timeline is limited to major updates related to the current Amazon EventBridge that are needed for the feature list and overview description.

In other words, please note that the items on this timeline are not all updates to Amazon EventBridge features, but are representative updates that I have picked out.

Amazon EventBridge Historical Timeline (Updates from January 14, 2016)

Now, onto the timeline for Amazon EventBridge's features. The history of Amazon EventBridge, combined with that of its predecessor Amazon CloudWatch Events, spans about six years at the time of writing. Amazon EventBridge supports many events and event rule targets; not all of them are listed here, only a representative subset.


| Date | Summary |
|---|---|
| 2016-01-14 | The predecessor, Amazon CloudWatch Events, is added as an event notification feature of Amazon CloudWatch. |
| 2016-02-24 | Support for Amazon EC2 Auto Scaling lifecycle hooks as events. |
| 2016-03-30 | Support for Amazon Simple Queue Service (SQS) as event rule targets. |
| 2016-04-19 | Scheduled events can now be defined in Cron format and Rate format on a per-minute basis. |
| 2016-09-09 | Support for AWS CodeDeploy as events. |
| 2016-11-14 | Support for Amazon EBS as events. |
| 2016-11-18 | Support for AWS Trusted Advisor as events. |
| 2016-11-21 | Support for Amazon Elastic Container Service (ECS) as events. |
| 2016-12-01 | Support for AWS Health as events. |
| 2017-03-07 | Support for Amazon EMR as events. Support for Amazon EC2 instance commands and AWS Step Functions state machines as event rule targets. |
| 2017-06-29 | Support for cross-account event delivery. Support for AWS CodePipeline and Amazon Inspector as event rule targets. |
| 2017-08-03 | Support for AWS CodeCommit and AWS CodeBuild as events. |
| 2017-09-08 | Support for AWS CodePipeline and AWS Glue as events. Support for AWS Batch as event rule targets. |
| 2017-12-13 | Support for AWS CodeBuild as event rule targets. |
| 2018-06-28 | It becomes possible to establish a private connection between Amazon VPC and Amazon EventBridge using Interface VPC Endpoints (AWS PrivateLink). |
| 2019-03-21 | It becomes possible to tag event rules. |
| 2019-07-11 | Amazon EventBridge is announced as a standalone AWS service, an extension of Amazon CloudWatch Events, adding SaaS partner integration and reaching General Availability (GA). |
| 2019-10-07 | AWS CloudFormation supports Amazon EventBridge resources. |
| 2019-11-21 | Amazon Elastic Container Registry (ECR) is supported as an event. |
| 2019-12-01 | Schema management and code binding are supported. |
| 2020-02-10 | Content-based filtering becomes available for event patterns in event rules. |
| 2020-02-24 | Tagging becomes available for the event bus. |
| 2020-04-30 | Schema Registry feature is added, supporting OpenAPI. |
| 2020-09-29 | Schema Registry supports JSON Schema Draft 4. |
| 2020-10-08 | Retry policies and dead-letter queues for targets are supported. |
| 2020-11-05 | Event archiving and replay functions are supported. |
| 2020-11-24 | Server-side encryption (SSE) is supported on the event bus. |
| 2021-03-03 | Amazon EventBridge events can be traced with AWS X-Ray. |
| 2021-03-04 | API Destinations are supported. |
| 2021-04-15 | Cross-region event transmission is supported on the event bus. |
| 2021-05-19 | It becomes possible to send and receive events between event buses in the same account and region. |
| 2021-07-15 | AWS Glue workflows are supported as targets for event rules. |
| 2021-08-30 | EC2 Image Builder is supported as a target for events and event rules. |
| 2021-09-03 | Schema Registry supports the function to detect cross-account events from the event bus, and automatically add them. |
| 2021-11-18 | Amazon DevOps Guru is supported as an event. |
| 2022-03-11 | Sandbox function is supported in the Amazon EventBridge console (AWS Management Console). |
| 2022-04-07 | Global endpoints are supported. |

Under the Amazon CloudWatch Events name, updates centered on event rules: rules could run on event receipt or on a schedule from the beginning, and support for many AWS services, both as event sources and as rule targets, was added over time.

Since becoming independent as Amazon EventBridge, in addition to expanded AWS service support in event rules, new features have been added, such as SaaS partner integration, API Destinations, custom event buses, archive and replay, global endpoints, schema registries, and the sandbox.

Differences between Amazon EventBridge and Amazon CloudWatch Events

Amazon EventBridge was created by extending the functionality of Amazon CloudWatch Events, which was part of Amazon CloudWatch, as described in the timeline above. From the beginning, the service name of Amazon CloudWatch Events in the AWS SDK and AWS CLI has been events (AWS::Events in CloudFormation), so SDK, CLI, and CloudFormation definitions for Amazon EventBridge and Amazon CloudWatch Events look the same.

Therefore, if you have been using the service name events (AWS::Events in CloudFormation) through the Amazon CloudWatch Events API, CLI, SDK, or CloudFormation, no changes are needed to migrate to Amazon EventBridge. However, the SDK class name has changed from CloudWatchEvents to EventBridge: code that creates the client by the service name events is not affected, but code that refers to the class name will need that name changed when the SDK is updated.

The main features that Amazon CloudWatch Events had are now part of Amazon EventBridge's Event Rules and Event Bus.

Amazon EventBridge has extended and inherited the Event Rule and Event Bus of Amazon CloudWatch Events, and in addition, new features such as SaaS partner collaboration, API Destinations, Archive and Replay, Global Endpoints, Schema Registries, and Sandbox have been added.

Current Overview, Functions, Features of Amazon EventBridge

From here, I will introduce the current feature list and overview of Amazon EventBridge.

Events

Amazon EventBridge is an AWS service that detects events generated by AWS services, routes events to other AWS services according to rule conditions, and creates custom events. It is designed to detect, control, and operate on events.

An event in AWS is a JSON object with a common set of top-level fields; the state being reported is expressed through the values of some of those fields and the fields nested under them.

Events that occur from AWS services inform about changes in the AWS environment and are generated when the state of AWS service resources changes.

On the other hand, custom events allow users to insert their own fields and data into the common field structure of the event to generate an event.

There are many AWS service events supported by Amazon EventBridge, so please refer to the following link for more details.

Events from AWS services - Amazon EventBridge

Event Patterns

Here is an example of a JSON object showing the common field structure of events. Parts that can be changed in custom events (described later) are marked (can be customized).

```
{
  "version": "##Event version: As of the time of writing this article, '0'##",
  "id": "##Event ID: ID in the form '12345678-1234-1234-1234-123456789012'##",
  "detail-type": "##Event detail type: Often a string that indicates the summary of the event (can be customized)##",
  "source": "##Event source: The source of events generated by AWS services is in the form 'aws.XXXXX' (can be customized)##",
  "account": "##The AWS account ID where the event was generated: in the form '123456789012'##",
  "time": "##Time the event was generated (UTC): in the form 'YYYY-MM-DDThh:mm:ssZ' (can be customized)##",
  "region": "##The region string where the event was generated: such as 'ap-northeast-1'##",
  "resources": [##Target resources of the event: if there are target resources, they come in an array format of strings (can be customized)##],
  "detail": {
    ##Event details: Different fields in each AWS service (can be customized)##
  }
}
```

Next, here is an example of an event generated by the PutObject action of Amazon S3.

```json
{
  "version": "0",
  "id": "12345678-1234-1234-1234-123456789012",
  "detail-type": "Object Created",
  "source": "aws.s3",
  "account": "123456789012",
  "time": "2023-01-01T00:00:00Z",
  "region": "ap-northeast-1",
  "resources": ["arn:aws:s3:::hidekazu-konishi.com"],
  "detail": {
    "version": "0",
    "bucket": {
      "name": "hidekazu-konishi.com"
    },
    "object": {
      "key": "index.html",
      "size": 10,
      "etag": "12345678901234567890123456789012",
      "version-id": "12345678901234567890123456789012",
      "sequencer": "123456789012345678"
    },
    "request-id": "1234567890123456",
    "requester": "123456789012",
    "source-ip-address": "10.0.0.1",
    "reason": "PutObject"
  }
}
```
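To make the common structure concrete, here is a minimal Python sketch (standard library only) that checks an event dict for the shared top-level fields listed above and parses its "time" value. The function name check_envelope is hypothetical and not part of any AWS SDK; it only checks field presence and a few types, not the full event schema.

```python
from datetime import datetime, timezone

# Top-level fields shared by every event, as in the structure shown above.
COMMON_FIELDS = ("version", "id", "detail-type", "source", "account",
                 "time", "region", "resources", "detail")


def check_envelope(event: dict) -> datetime:
    """Raise ValueError if a common field is missing; return the event time in UTC."""
    missing = [field for field in COMMON_FIELDS if field not in event]
    if missing:
        raise ValueError(f"missing top-level fields: {missing}")
    if not isinstance(event["resources"], list):
        raise ValueError("'resources' must be an array")
    if not isinstance(event["detail"], dict):
        raise ValueError("'detail' must be a JSON object")
    # "time" uses the 'YYYY-MM-DDThh:mm:ssZ' form; swap the trailing Z for +00:00
    # so that datetime.fromisoformat also accepts it before Python 3.11.
    parsed = datetime.fromisoformat(event["time"].replace("Z", "+00:00"))
    return parsed.astimezone(timezone.utc)
```

For the PutObject event above, check_envelope(...).isoformat() would give "2023-01-01T00:00:00+00:00".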

Sending Custom Events

Custom events are generated by users inserting their own fields and data into the common field structure of events. While events from AWS services mainly indicate changes in the state of AWS resources, custom events can be used for many purposes depending on the objective.

Custom events are sent with the AWS CLI or the AWS SDK (the PutEvents API).

Next, I will describe the format of the JSON parameters used when sending custom events with the AWS CLI's put-events command.

The event bus specified by one of these parameters, EventBusName, is covered later.

```
[
  {
    "Time": "##Timestamp in RFC3339 compliant format. If not specified, the time of sending will be used.##",
    "Source": "##Any string. If it follows the format used by AWS services, it will be in the format '[alphanumeric lower case].[alphanumeric lower case].~'.##",
    "Resources": [
      "##Resource name. Multiple can be specified in the array. If not specified, it is sent as an empty array.##",
      ...
    ],
    "DetailType": "##Any string. If it follows the DetailType used by AWS services, it becomes a string that represents the summary of the event.##",
    "Detail": "##Any JSON string. Since this parameter itself is in JSON, double quotation marks need to be escaped.##",
    "EventBusName": "##Specify the EventBusName or EventBus ARN to send the custom event. If not specified, the default event bus will be used.##",
    "TraceHeader": "##If using X-Ray, specify the Tracing header.##"
  },
  ...
]
```
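Because Detail is a JSON string embedded in JSON, escaping it by hand is error-prone. A minimal Python sketch, assuming you build entries in code rather than in a file, is to serialize the detail with json.dumps. The helper names make_entry and batches are hypothetical; the batch size of 10 reflects the PutEvents limit on entries per request.

```python
import json

MAX_ENTRIES_PER_CALL = 10  # PutEvents accepts at most 10 entries per request


def make_entry(source, detail_type, detail, resources=None, event_bus_name=None):
    """Build one PutEvents entry. Detail must be a JSON *string*, so the dict is serialized."""
    entry = {
        "Source": source,
        "DetailType": detail_type,
        "Detail": json.dumps(detail),  # json.dumps escapes the inner double quotes
        "Resources": list(resources or []),
    }
    if event_bus_name is not None:  # omitted -> the default event bus is used
        entry["EventBusName"] = event_bus_name
    return entry


def batches(entries, size=MAX_ENTRIES_PER_CALL):
    """Split a list of entries into chunks small enough for one PutEvents call."""
    for start in range(0, len(entries), size):
        yield entries[start:start + size]


entry = make_entry(
    "custom.sample",
    "Custom Sample Event",
    {"sample-parameter-key1": "sample-parameter-value1"},
    resources=["sample-resource-a", "sample-resource-b"],
)
print(entry["Detail"])  # {"sample-parameter-key1": "sample-parameter-value1"}
```

With boto3, each batch could then be passed to boto3.client("events").put_events(Entries=batch); that call needs AWS credentials and is not run in the sketch.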

Next, here is an example of writing custom events in a JSON file and sending them from that file with the AWS CLI's put-events command.

```
[ho2k_com@ho2k-com ~]$ vi entries.json
[ho2k_com@ho2k-com ~]$ cat entries.json
[
  {
    "Time": "2023-01-01T00:00:00Z",
    "Source": "custom.sample",
    "Resources": [
      "sample-resource-a",
      "sample-resource-b"
    ],
    "DetailType": "Custom Sample Event",
    "Detail": "{ \"sample-parameter-key1\": \"sample-parameter-value1\", \"sample-parameter-key2\": \"sample-parameter-value2\" }",
    "EventBusName": "arn:aws:events:ap-northeast-1:123456789012:event-bus/CustomSampleEventBus"
  }
]
[ho2k_com@ho2k-com ~]$ aws events put-events --entries file://entries.json
```

Then, the following is an example of the JSON of the custom event generated when this AWS CLI command is run in the ap-northeast-1 region of AWS account 123456789012.

```json
{
  "version": "0",
  "id": "12345678-1234-1234-1234-123456789000",
  "detail-type": "Custom Sample Event",
  "source": "custom.sample",
  "account": "123456789012",
  "time": "2023-01-01T00:00:00Z",
  "region": "ap-northeast-1",
  "resources": ["sample-resource-a", "sample-resource-b"],
  "detail": {
    "sample-parameter-key1": "sample-parameter-value1",
    "sample-parameter-key2": "sample-parameter-value2"
  }
}
```
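To connect the entry in entries.json with the event above, here is an illustrative Python mapping from PutEvents entry fields to the delivered event. It is a hypothetical model written for explanation (to_envelope does not exist in any SDK): in reality EventBridge assigns id, account, and region itself, and the sketch simply takes them as arguments.

```python
import json
from datetime import datetime, timezone


def to_envelope(entry, account, region, event_id, now=None):
    """Illustrative model of how PutEvents entry fields show up in the delivered event."""
    now = now or datetime.now(timezone.utc)
    return {
        "version": "0",
        "id": event_id,                      # assigned by EventBridge in reality
        "detail-type": entry["DetailType"],
        "source": entry["Source"],
        "account": account,                  # the sending account
        "time": entry.get("Time", now.strftime("%Y-%m-%dT%H:%M:%SZ")),  # send time if Time omitted
        "region": region,                    # the region the call was made in
        "resources": entry.get("Resources", []),
        "detail": json.loads(entry["Detail"]),  # escaped JSON string -> JSON object
    }
```

The key point the sketch shows is the last line: the escaped Detail string in the request becomes a nested JSON object under "detail" in the delivered event.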

Event Bus

An Event Bus is a pipeline that receives events; the Event Rules associated with an Event Bus then process the events sent to it according to their rules. There are two types of Event Buses: the default Event Bus, which is provided from the start, and Custom Event Buses, which users can create themselves.

As mentioned earlier, a custom event can specify the Event Bus to send to, but events without a specified Event Bus are all sent to the default Event Bus of the account/region where the event occurred.

Therefore, if you use only the default Event Bus, it may receive events you do not need, and your Event Rules may become complicated as they try to handle those unwanted events.

For this reason, you can create Custom Event Buses so that each one receives only the events your Event Rules evaluate, based on your needs and functionality.

Event Rule

There are two types of Event Rules: those that execute upon event receipt, and those that execute on a schedule.

Event rules that are executed by receiving events

This type executes when an event received from the associated Event Bus matches the defined event pattern, and sends the event to the target described later. The following shows an example of an event pattern definition for this type of Event Rule, together with a sample event to be evaluated.

The sample event to be evaluated shown here matches the example event pattern definition.

Sample Event to Be Evaluated:

```json
{
  "version": "0",
  "id": "12345678-1234-1234-1234-123456789012",
  "detail-type": "Object Created",
  "source": "aws.s3",
  "account": "123456789012",
  "time": "2023-01-01T00:00:00Z",
  "region": "ap-northeast-1",
  "resources": ["arn:aws:s3:::hidekazu-konishi.com"],
  "detail": {
    "version": "0",
    "bucket": {
      "name": "hidekazu-konishi.com"
    },
    "object": {
      "key": "index.html",
      "size": 10,
      "etag": "12345678901234567890123456789012",
      "version-id": "12345678901234567890123456789012",
      "sequencer": "123456789012345678"
    },
    "request-id": "1234567890123456",
    "requester": "123456789012",
    "source-ip-address": "10.0.0.1",
    "reason": "PutObject"
  }
}
```

Example of Event Rule's Event Pattern Definition:

```json
{
  "source": [
    "aws.s3"
  ],
  "detail-type": [{
    "anything-but": [
      "AWS API Call via CloudTrail"
    ]
  }],
  "detail": {
    "bucket": {
      "name": [{
        "anything-but": {
          "prefix": "www."
        }
      }]
    },
    "object": {
      "key": [{
        "prefix": "index."
      }],
      "size": [{
        "numeric": [">", 0]
      }]
    },
    "source-ip-address": [{
      "cidr": "10.0.0.0/24"
    }],
    "reason": [{
      "exists": true
    }]
  }
}
```

Content Filtering by Simple Matching

First, the following part of the above example defines a simple match on a field's value. To define a pattern match, write the conditions as an array in place of the value of the field to be evaluated; the field matches if its value equals any of the values in the array.

```
"source": [
  "aws.s3"
],
```

Next, I will look at what "prefix", "anything-but", "numeric", "cidr", and "exists" mean, referring to the above example.

Content Filtering by 'prefix'

If you use "prefix" for the value of a field, you can define a match on the prefix of the specified string (it matches from the beginning). In the above example, the following part applies, indicating a match for values that begin with "index." (index.html, index.php, index.do, etc. match).

```
"object": {
  "key": [{
    "prefix": "index."
  }],
```

Content Filtering by 'anything-but'

If you use "anything-but" for the value of a field, you can define a match on every value except the specified ones. In the above example, the following part applies, indicating that any value other than "AWS API Call via CloudTrail" matches (Object Created, Object Deleted, etc. match).

```
"detail-type": [{
  "anything-but": [
    "AWS API Call via CloudTrail"
  ]
}],
```

Content Filtering by 'prefix' and 'anything-but'

You can also use "prefix" and "anything-but" in combination. In the above example, the following part applies, indicating that any string that does not start with "www." matches (strings starting with en., api., etc. match).

```
"bucket": {
  "name": [{
    "anything-but": {
      "prefix": "www."
    }
  }]
},
```

Content Filtering by 'numeric'

If you use "numeric" for the value of a field, you can define a match for numeric values where the comparison with the given operator is true. In the above example, the following part applies, indicating a match when the number is greater than 0 (the comparison operators "=", ">", "<", ">=", and "<=" are available).

```
"size": [{
  "numeric": [">", 0]
}]
```

Content Filtering by 'cidr'

If you use "cidr" for the value of a field, you can define a match against an IPv4 or IPv6 address range in CIDR notation. In the above example, the following part indicates a match for the CIDR range "10.0.0.0/24" (10.0.0.0 to 10.0.0.255 match).

```
"source-ip-address": [{
  "cidr": "10.0.0.0/24"
}],
```

Content Filtering by 'exists'

If you use "exists" for the value of a field, you can define a match on the presence or absence of the field. In the above example, the following part indicates a match when the field exists (with "exists": false, it matches when the field does not exist).

```
"reason": [{
  "exists": true
}]
```
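To show how these operators combine, here is a minimal Python sketch of a pattern matcher (standard library only). It is a simplified model, not EventBridge's actual matching engine: the function names are hypothetical, array-valued event fields and other operators are not handled, and "exists" is checked on the field key only. Fields in a pattern are combined with AND, and the elements of each field's array with OR.

```python
import ipaddress
import operator

# Comparison operators allowed inside "numeric".
_NUMERIC_OPS = {"=": operator.eq, ">": operator.gt, "<": operator.lt,
                ">=": operator.ge, "<=": operator.le}


def _match_condition(cond, value, present):
    """Evaluate one element of a pattern array against a field's value."""
    if not isinstance(cond, dict):                 # simple match on an exact value
        return present and value == cond
    if "exists" in cond:
        return present == cond["exists"]
    if not present:
        return False
    if "prefix" in cond:
        return isinstance(value, str) and value.startswith(cond["prefix"])
    if "anything-but" in cond:
        excluded = cond["anything-but"]
        if isinstance(excluded, dict) and "prefix" in excluded:
            return not (isinstance(value, str) and value.startswith(excluded["prefix"]))
        if not isinstance(excluded, list):
            excluded = [excluded]
        return value not in excluded
    if "numeric" in cond:
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return False
        spec = cond["numeric"]
        # Pairs such as [">", 0, "<=", 5] must all hold.
        return all(_NUMERIC_OPS[spec[i]](value, spec[i + 1]) for i in range(0, len(spec), 2))
    if "cidr" in cond:
        try:
            return ipaddress.ip_address(value) in ipaddress.ip_network(cond["cidr"])
        except (TypeError, ValueError):
            return False
    raise ValueError(f"unsupported condition: {cond}")


def matches(pattern: dict, event: dict) -> bool:
    """True if every field in the pattern matches the event (AND across fields)."""
    for key, cond in pattern.items():
        present = key in event
        value = event.get(key)
        if isinstance(cond, dict):                 # nested object: recurse into it
            if not (present and isinstance(value, dict) and matches(cond, value)):
                return False
        elif not any(_match_condition(c, value, present) for c in cond):
            return False                           # no array element matched (OR within a field)
    return True
```

Loading the sample event and event pattern above with json.loads and calling matches(pattern, event) should return True; changing "source" in the event to "aws.ec2" makes it return False.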

Event rules of the type that execute upon event reception are defined by setting an event pattern. When a received event matches the pattern, the rule sends the received event, or a customized event, to the target described later.

Event rules that are executed according to a schedule

The scheduled type executes according to a schedule defined in either Cron or Rate format, sending events to the target described later.

About Cron Format Description

The format of the Cron style is as follows.

```
cron([minute] [hour] [day] [month] [day of the week] [year])
```

Here are some examples of how to write in Cron format.

```
cron(0/15 * * * ? *)       # Execute every 15 minutes.
cron(0/15 0-9 * * ? *)     # Execute every 15 minutes from 0:00-9:45 (UTC+0) [from 9:00-18:45 (UTC+9, JST) in Japan time].
cron(0 0 * * ? *)          # Execute at 0:00 every day (UTC+0) [at 9:00 every day (UTC+9, JST) in Japan time].
cron(0 0 1 * ? *)          # Execute at 0:00 on the 1st of every month (UTC+0) [at 9:00 on the 1st of every month (UTC+9, JST) in Japan time].
cron(0 15 L * ? *)         # Execute at 15:00 on the last day of each month (UTC+0) [at 0:00 on the 1st day of each month (UTC+9, JST) in Japan time].
cron(0 0 ? * MON-FRI *)    # Execute at 0:00 every day from Monday to Friday (UTC+0) [at 09:00 every day from Monday to Friday (UTC+9, JST) in Japan time].
cron(0 23 ? * SUN-THU *)   # Execute at 23:00 every day from Sunday to Thursday (UTC+0) [at 08:00 every day from Monday to Friday (UTC+9, JST) in Japan time].
```

The important thing to note is that times in EventBridge are handled in UTC, not local time (such as JST).
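Because the schedule is evaluated in UTC, it helps to double-check the Japan-time equivalents. A minimal Python sketch (standard library only; the function name utc_to_jst is hypothetical) that converts a UTC run time to JST, using a fixed UTC+9 offset since Japan does not observe daylight saving time:

```python
from datetime import datetime, timedelta, timezone

# Japan Standard Time is a fixed UTC+9 offset (no daylight saving time).
JST = timezone(timedelta(hours=9), "JST")


def utc_to_jst(dt_utc: datetime) -> datetime:
    """Convert a UTC run time (EventBridge rule schedules are in UTC) to Japan time."""
    if dt_utc.tzinfo is None:
        raise ValueError("pass a timezone-aware UTC datetime")
    return dt_utc.astimezone(JST)


# cron(0 15 L * ? *) fires on 2023-01-31 (the last day of January) at 15:00 UTC.
print(utc_to_jst(datetime(2023, 1, 31, 15, 0, tzinfo=timezone.utc)))
# -> 2023-02-01 00:00:00+09:00, i.e. 0:00 on the 1st in JST
```

Similarly, 0:00 UTC on Monday 2023-01-02 converts to 09:00 JST the same Monday, and 23:00 UTC on Sunday 2023-01-01 converts to 08:00 JST on Monday, which is why the SUN-THU schedule corresponds to weekdays in Japan time.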

Also, in Cron notation you cannot specify both "day" and "day of the week": if you specify "day of the week", set "day" to "?", and if you specify "day", set "day of the week" to "?".
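The "?" rule is easy to check mechanically. The following Python sketch (the function check_cron is hypothetical) splits a cron(...) expression into its six fields and rejects expressions where both or neither of "day" and "day of the week" are "?". It does not validate the individual field values.

```python
def check_cron(expr: str) -> list[str]:
    """Split cron(...) into its six fields and enforce the '?' rule for day / day of the week."""
    if not (expr.startswith("cron(") and expr.endswith(")")):
        raise ValueError("expected cron(...)")
    fields = expr[len("cron("):-1].split()
    if len(fields) != 6:
        raise ValueError(f"expected 6 fields, got {len(fields)}")
    day, day_of_week = fields[2], fields[4]
    # Exactly one of the two fields must be '?'.
    if (day == "?") == (day_of_week == "?"):
        raise ValueError("exactly one of 'day' and 'day of the week' must be '?'")
    return fields
```

For example, check_cron("cron(0 0 ? * MON-FRI *)") returns the six fields, while "cron(0 0 1 * MON *)" raises ValueError.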

About Rate Format Description

The format of the Rate style is as follows.

```
rate([value] [unit])
```

The available units are minute, minutes, hour, hours, day, and days; use the singular unit when the value is 1 and the plural unit otherwise. Here are some examples of how to write in Rate format.

```
rate(5 minutes)   # Execute every 5 minutes
rate(12 hours)    # Execute every 12 hours
rate(1 day)       # Execute every day
rate(7 days)      # Execute every 7 days
```
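As a small Python sketch (the function rate_to_seconds is hypothetical, standard library only), a rate expression can be parsed into an interval in seconds while enforcing the singular/plural rule for the unit:

```python
import re

_SECONDS_PER_UNIT = {"minute": 60, "hour": 3600, "day": 86400}


def rate_to_seconds(expr: str) -> int:
    """Return the interval of a rate(...) expression in seconds."""
    m = re.fullmatch(r"rate\((\d+) (minutes?|hours?|days?)\)", expr)
    if m is None:
        raise ValueError(f"not a rate expression: {expr!r}")
    value, unit = int(m.group(1)), m.group(2)
    singular = not unit.endswith("s")
    # The value must be positive; use the singular unit only when the value is 1.
    if value < 1 or singular != (value == 1):
        raise ValueError(f"invalid value/unit combination: {expr!r}")
    return value * _SECONDS_PER_UNIT[unit.rstrip("s")]
```

With this, rate(5 minutes) gives 300 and rate(1 day) gives 86400, while rate(1 days) is rejected.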

About Events Occurring When Scheduled Execution is Performed

In event rules of the type executed on a schedule, an event occurs each time the schedule runs. This event can be specified as the event sent to the resource or endpoint set as the target described later.

Here is an example of an event that occurs when scheduled execution is performed. The "resources" array contains the ARN of the event rule that performed the scheduled execution.

```json
{
  "version": "0",
  "id": "12345678-1234-1234-1234-123456789012",
  "detail-type": "Scheduled Event",
  "source": "aws.events",
  "account": "123456789012",
  "time": "2023-01-01T00:00:00Z",
  "region": "ap-northeast-1",
  "resources": [
    "arn:aws:events:ap-northeast-1:123456789012:rule/SampleEventRule"
  ],
  "detail": {}
}
```
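A target usually needs only a few fields from this event. As a sketch, assuming a Python function target that receives the event as a dict (the handler(event, context) signature follows the AWS Lambda convention, but the return values here are hypothetical), it could identify which rule fired like this:

```python
def handler(event, context=None):
    """Hypothetical target function for the scheduled event shown above."""
    if event.get("source") != "aws.events" or event.get("detail-type") != "Scheduled Event":
        return {"skipped": True}
    rule_arn = event["resources"][0]
    # The rule name is the last path segment of the rule ARN (".../rule/SampleEventRule").
    rule_name = rule_arn.rsplit("/", 1)[-1]
    return {"skipped": False, "rule": rule_name, "fired_at": event["time"]}
```

For the example event, this returns {"skipped": False, "rule": "SampleEventRule", "fired_at": "2023-01-01T00:00:00Z"}.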

Event rules of the type executed on a schedule define the schedule in either Cron or Rate format, and can send either the scheduled execution event or a custom event to the target described later.

Targets

A target is a resource or endpoint to which an event rule sends events. An event is sent to a target under the following conditions:

- For event rules executed when an event is received: the event received from the event bus matches the event pattern defined in the rule.
- For event rules executed on a schedule: the date and time match the schedule defined in the rule.

The event sent to the target can be one of the following:

- For event rules executed when an event is received: the entire event that matched the event pattern definition, a part of it, a constant (JSON text), or an event defined by an input transformer.
- For event rules executed on a schedule: the entire scheduled execution event, a part of it, a constant (JSON text), or an event defined by an input transformer.

A constant (JSON text) sends fixed JSON text to the target as the event.

The input transformer is explained in the next section.

Input Transformer

The Input Transformer converts the JSON structure and field contents of an event that matched an event rule's event pattern before the event is sent to a target. In the input transformer, the input path maps values in the event to variable names, and the template defines the output JSON structure using those variables.

Here is an example of converting an event with the input path and template of an input transformer, and the resulting output.

Event Before Conversion:

```json
{
  "version": "0",
  "id": "12345678-1234-1234-1234-123456789012",
  "detail-type": "Object Created",
  "source": "aws.s3",
  "account": "123456789012",
  "time": "2023-01-01T00:00:00Z",
  "region": "ap-northeast-1",
  "resources": ["arn:aws:s3:::hidekazu-konishi.com"],
  "detail": {
    "version": "0",
    "bucket": {
      "name": "hidekazu-konishi.com"
    },
    "object": {
      "key": "index.html",
      "size": 10,
      "etag": "12345678901234567890123456789012",
      "version-id": "12345678901234567890123456789012",
      "sequencer": "123456789012345678"
    },
    "request-id": "1234567890123456",
    "requester": "123456789012",
    "source-ip-address": "10.0.0.1",
    "reason": "PutObject"
  }
}
```

Input Transformer:

* Input Path

```json
{
  "bucket_name": "$.detail.bucket.name",
  "object_key": "$.detail.object.key",
  "action": "$.detail.reason",
  "datetime": "$.time"
}
```

* Input Transformer Template

```json
{
  "bucket_name": "<bucket_name>",
  "object_key": "<object_key>",
  "action": "<action>",
  "datetime": "<datetime>"
}
```

* Output Result

```json
{
  "bucket_name": "hidekazu-konishi.com",
  "object_key": "index.html",
  "action": "PutObject",
  "datetime": "2023-01-01T00:00:00Z"
}
```
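The input path and template can be mimicked in a few lines of Python to see how the output is assembled. This is a simplified sketch, not EventBridge's implementation: the function names are hypothetical, only plain dotted paths such as $.detail.bucket.name are supported (no array indexes or wildcards), and values are substituted as text into the template before it is parsed as JSON.

```python
import json
import re


def resolve_path(event: dict, path: str):
    """Resolve a simple dotted path such as '$.detail.bucket.name' (no arrays or filters)."""
    if not path.startswith("$."):
        raise ValueError(f"unsupported path: {path!r}")
    node = event
    for part in path[2:].split("."):
        node = node[part]
    return node


def transform(event: dict, input_paths: dict, template: str):
    """Fill <name> placeholders in the template with values picked by the input paths."""
    values = {name: resolve_path(event, path) for name, path in input_paths.items()}

    def substitute(match):
        name = match.group(1)
        if name not in values:
            return match.group(0)          # leave unknown placeholders as they are
        value = values[name]
        # Strings are inserted without their own quotes (the template supplies them);
        # other values are inserted as JSON literals.
        return json.dumps(value)[1:-1] if isinstance(value, str) else json.dumps(value)

    return json.loads(re.sub(r"<([A-Za-z0-9_.-]+)>", substitute, template))
```

Passing the event, input path, and template above (the template as a string) to transform should reproduce the output result shown.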

Available AWS Services and API Destinations as Targets

Since Amazon EventBridge supports numerous AWS services as targets, please refer to the following link.

Amazon EventBridge targets - Amazon EventBridge

Among them, the main AWS services and API destinations available as targets at the time of writing are as follows.

| Service Name | Cross-Region Sending | Cross-Account Sending |
|---|---|---|
| Event Bus | Yes | Yes |
| API Destinations (including API sending partners) | Yes | Yes |
| API Gateway | Yes | No |
| Batch Job Queue | No | No |
| CloudWatch Log Group | No | No |
| CodeBuild Project | No | No |
| CodePipeline | No | No |
| EC2 CreateSnapshot API Call | No | No |
| EC2 Image Builder | No | No |
| EC2 RebootInstances API Call | No | No |
| EC2 StopInstances API Call | No | No |
| EC2 TerminateInstances API Call | No | No |
| ECS Task | No | No |
| Firehose Delivery Stream | No | No |
| Glue Workflow | No | No |
| Incident Manager Response Plan | No | No |
| Inspector Assessment Template | No | No |
| Kinesis Stream | No | No |
| Lambda Function | No | No |
| Redshift Cluster | No | No |
| SageMaker Pipeline | No | No |
| SNS Topic | No | No |
| SQS Queue | No | No |
| Step Functions state machine | No | No |
| Systems Manager Automation | No | No |
| Systems Manager OpsItem | No | No |
| Systems Manager Run Command | No | No |

To send and receive events across regions and accounts, you need to configure policies on both the sending and receiving sides.

First, in the resource-based policy of the event bus in the receiving account/region, allow events:PutEvents from the event rules of the sending account/region. Then, in the permission policy of the IAM role associated with the event rule in the sending account/region, allow events:PutEvents on the ARN of the event bus in the receiving account/region. With events:PutEvents allowed in the policies on both the receiving and sending sides, events can be sent and received across regions and accounts.

API Destinations is a feature that lets you register APIs with EventBridge and specify them as targets. You can register and use APIs provided by API Destination partners, or APIs you have developed yourself.

EventBridge's API Destinations supports Basic, OAuth, and API key authentication.

Because an API Destinations target is an API, there are no cross-region or cross-account transmission restrictions.

API Gateway cannot be specified as a cross-account target, but a cross-region API Gateway can be specified as a target using the AWS CLI or AWS SDK (not from the EventBridge console).

However, you can also register Amazon API Gateway as an API in API Destinations and specify it as an EventBridge target, which enables cross-account transmission in addition to cross-region transmission.
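As a sketch of the two cross-region/cross-account policies described above, the following Python builds them as dicts ready to serialize with json.dumps. The account IDs, region, and bus name are hypothetical. Granting the whole sender account (its :root principal) is one common choice; you may want to narrow it with conditions.

```python
import json

RECEIVER_BUS_ARN = "arn:aws:events:us-east-1:210987654321:event-bus/ReceiverBus"  # hypothetical
SENDER_ACCOUNT_ID = "123456789012"                                              # hypothetical


def bus_resource_policy(bus_arn: str, sender_account_id: str) -> dict:
    """Receiving side: resource-based policy on the event bus allowing the sender to call PutEvents."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AllowSenderPutEvents",
            "Effect": "Allow",
            "Principal": {"AWS": f"arn:aws:iam::{sender_account_id}:root"},
            "Action": "events:PutEvents",
            "Resource": bus_arn,
        }],
    }


def sender_role_policy(bus_arn: str) -> dict:
    """Sending side: permission policy for the IAM role used by the event rule's target."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "events:PutEvents",
            "Resource": bus_arn,
        }],
    }


print(json.dumps(bus_resource_policy(RECEIVER_BUS_ARN, SENDER_ACCOUNT_ID), indent=2))
print(json.dumps(sender_role_policy(RECEIVER_BUS_ARN), indent=2))
```

The sending-side role also needs a trust policy that allows events.amazonaws.com to assume it; that part is not shown here.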

Sandbox

The Sandbox is a feature of the EventBridge console (AWS Management Console) that lets you test the event patterns and input transformers used in EventBridge rules.

The Sandbox mainly lets you test the following:

- Test whether event patterns defined in EventBridge rules match sample events or custom input events.

- Test the JSON input path, template, and output defined in the Input Transformer using sample events or custom input events.

Archives and Replays

Archives is a feature that stores events received on a specified event bus. You can archive only the events that match an event pattern you define (or all events), and you can specify the retention period (or keep events indefinitely).

Archived events can be replayed.

When replaying archived events, you can choose which event rules associated with the event bus receive the replay (or choose all rules). You can also replay part of an archive by specifying start and end times, which limit the range of original event times included in the replay.
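As a rough model of a partial replay, the sketch below keeps only archived events whose original "time" falls within the chosen window. It is not how EventBridge implements replay: the function names are hypothetical, the half-open [start, end) interval is an assumption made for illustration, sorting is only for readability, and the selection of destination rules is not modeled.

```python
from datetime import datetime


def parse_time(value: str) -> datetime:
    """Parse an event 'time' such as '2023-01-01T00:00:00Z' into an aware datetime."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def replay_window(archived: list, start: datetime, end: datetime) -> list:
    """Keep archived events whose original 'time' is in [start, end), oldest first."""
    picked = [event for event in archived if start <= parse_time(event["time"]) < end]
    return sorted(picked, key=lambda event: parse_time(event["time"]))
```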

Global Endpoints

Global Endpoints in EventBridge configure your event buses for fault tolerance across two regions. To configure a global endpoint, you set the following:

- The event buses in your primary and secondary regions. The event bus names must be the same in both regions.
- An Amazon Route 53 health check that determines failover. You can set up your own Amazon Route 53 health check, but a CloudFormation template is also available that bases the health check on the IngestionToInvocationStartLatency metric in the AWS/Events namespace of Amazon CloudWatch.
- Whether event replication is enabled or disabled.

Event replication asynchronously replicates events from the primary region to the secondary region, so the order of event transmission is not guaranteed.

Schemas and Schema Registries

Schemas define the structure of events handled in EventBridge.

Schemas for events generated by AWS services are already available, and users can also create and register custom schemas.

Schema Registries are logical groups for classifying and organizing schemas registered in EventBridge.

From the outset, EventBridge comes with AWS Event Schema Registries, Discovered Schema Registries, and Custom Schema Registries.

Schema Registries can also be newly created for registering user's custom schemas.

Among these, newly discovered schemas are automatically registered in the Discovered Schema Registry by enabling Schema Discovery on the event bus.

Code Bindings

Code Bindings can be downloaded for schemas registered in a Schema Registry.

With Code Bindings, when developing in programming languages such as Golang, Java, Python, and TypeScript in an IDE, events can be handled as objects in code, and features such as validation and autocomplete become available.

Code Bindings can be downloaded from the EventBridge console, as well as from IDE plugins like AWS Toolkit for Visual Studio Code.
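Code bindings are generated for you, so the following is only a hand-written Python illustration of what they provide: typed objects instead of raw dicts. The class and field names are hypothetical and do not match the code EventBridge actually generates for the aws.s3 "Object Created" schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class S3Object:
    key: str
    size: int


@dataclass
class ObjectCreated:
    """Hand-written stand-in for a code binding of the aws.s3 'Object Created' event."""
    source: str
    detail_type: str
    time: str
    resources: List[str]
    bucket_name: str
    s3_object: S3Object

    @classmethod
    def from_event(cls, event: dict) -> "ObjectCreated":
        """Map the raw event dict onto typed attributes (a KeyError flags a missing field)."""
        detail = event["detail"]
        return cls(
            source=event["source"],
            detail_type=event["detail-type"],
            time=event["time"],
            resources=list(event["resources"]),
            bucket_name=detail["bucket"]["name"],
            s3_object=S3Object(key=detail["object"]["key"], size=int(detail["object"]["size"])),
        )
```

With an object like this, an IDE can autocomplete created.s3_object.key instead of relying on string keys such as event["detail"]["object"]["key"].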

Receiving Events from SaaS Partners

If a SaaS partner is integrated with Amazon EventBridge, you can receive events from the services or applications it provides, using the event patterns prepared for each SaaS partner.

To receive events from a SaaS partner, create a partner event source on the SaaS partner's website (or similar), and associate it with an event bus created for that SaaS partner.

EventBridge's console for creating event rules also provides event patterns for each SaaS partner that you can select and customize.

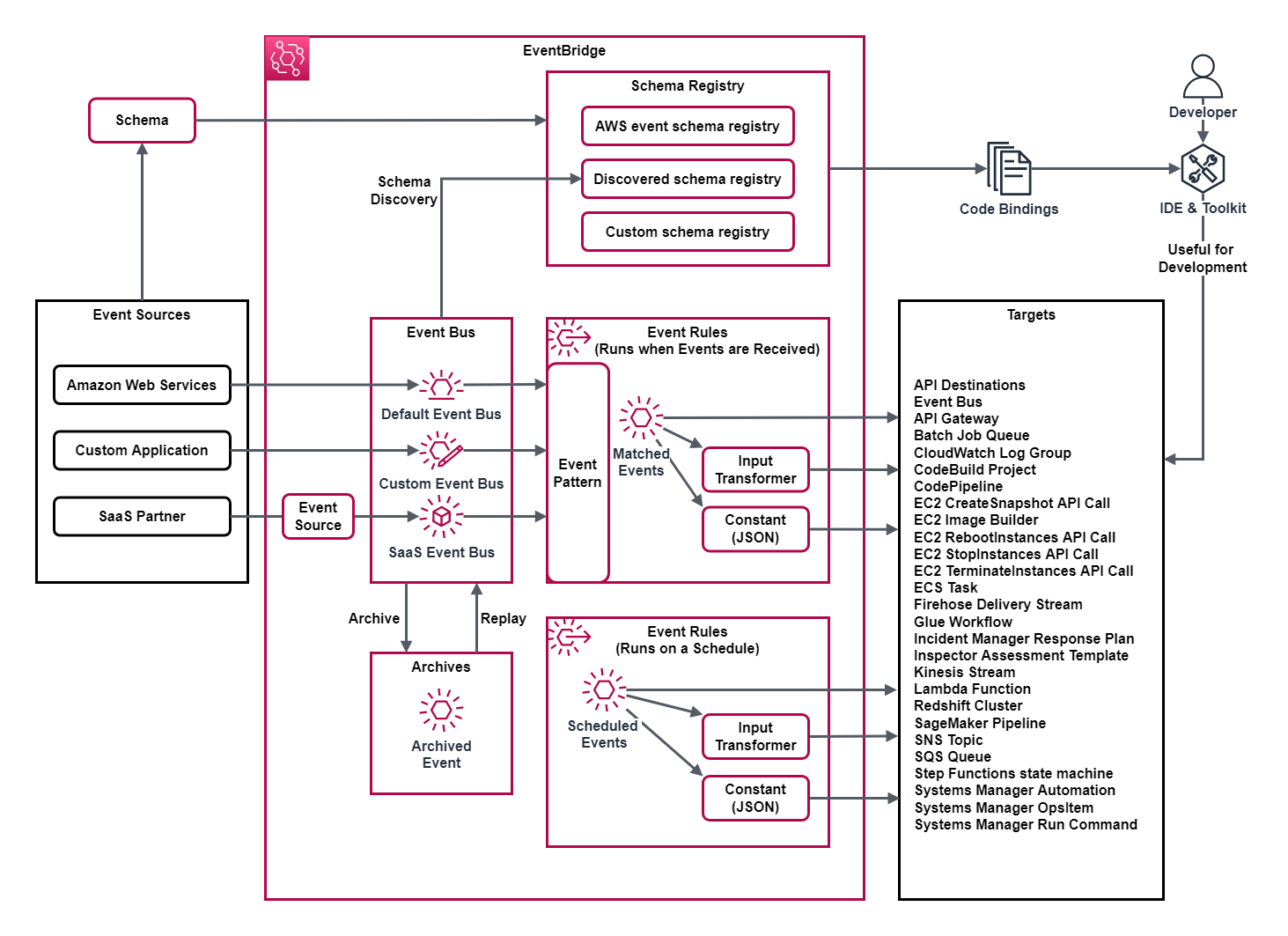

Overview Diagram of Amazon EventBridge Features

The relationship of the functions of Amazon EventBridge can be summarized in the following overview diagram.

References:

Tech Blog with curated related content

AWS Documentation(Amazon EventBridge)

AWS Documentation(Amazon CloudWatch Events)

Summary

In this post, I have created a timeline for Amazon EventBridge and looked at the list and overview of its features.

Amazon EventBridge emerged as an expansion of Amazon CloudWatch Events, the event notification feature of Amazon CloudWatch.

Building on the Event Rule function that Amazon CloudWatch Events had, Amazon EventBridge added features such as customizable Event Rules, Event Bus, and SaaS partner integration, enabling more flexible event control and wider event collaboration.

Covering both event-driven triggers for automation and scheduled executions, Amazon EventBridge is a service you'll inevitably encounter when designing and developing with AWS services.

Therefore, updates to Amazon EventBridge will undoubtedly have some impact on services leveraging AWS in the future.

I would like to continue watching how Amazon EventBridge will provide features in the future.

Furthermore, there is also a timeline of the entire history of AWS services, including services other than Amazon EventBridge, so please take a look if you're interested.

AWS History and Timeline - Almost All AWS Services List, Announcements, General Availability(GA)

Written by Hidekazu Konishi