Using Amazon Bedrock to repeatedly generate images with Stable Diffusion XL via Claude 3.5 Sonnet until requirements are met

First Published:

Last Updated:

I have conducted some simple trials and comparisons of the image recognition capabilities of these Anthropic Claude models, particularly focusing on their OCR (Optical Character Recognition) performance. You can find the details in the article below:

Using Amazon Bedrock for titling, commenting, and OCR (Optical Character Recognition) with Claude 3 Haiku

Using Amazon Bedrock for titling, commenting, and OCR (Optical Character Recognition) with Claude 3 Sonnet

Using Amazon Bedrock for titling, commenting, and OCR (Optical Character Recognition) with Claude 3 Opus

Using Amazon Bedrock for titling, commenting, and OCR (Optical Character Recognition) with Claude 3.5 Sonnet

In this article, I will introduce an example of using Amazon Bedrock to further utilize the image recognition capabilities of Anthropic Claude 3.5 Sonnet to verify and regenerate images created by Stability AI Stable Diffusion XL (SDXL).

This attempt also aims to reduce the amount of human visual inspection work by automatically determining whether generated images meet requirements.

* The source code published in this article and other articles by this author was developed as part of independent research and is provided 'as is' without any warranty of operability or fitness for a particular purpose. Please use it at your own risk. The code may be modified without prior notice.

* This article uses AWS services on an AWS account registered individually for writing.

* The Amazon Bedrock Models used for writing this article were executed on 2024-07-20 (JST) and are based on the following End user license agreement (EULA) at that time:

Anthropic Claude 3.5 Sonnet(anthropic.claude-3-5-sonnet-20240620-v1:0): Anthropic on Bedrock - Commercial Terms of Service(Effective: January 2, 2024)

Stability AI Stable Diffusion XL(stability.stable-diffusion-xl-v1): STABILITY AMAZON BEDROCK END USER LICENSE AGREEMENT(Last Updated: April 29, 2024)

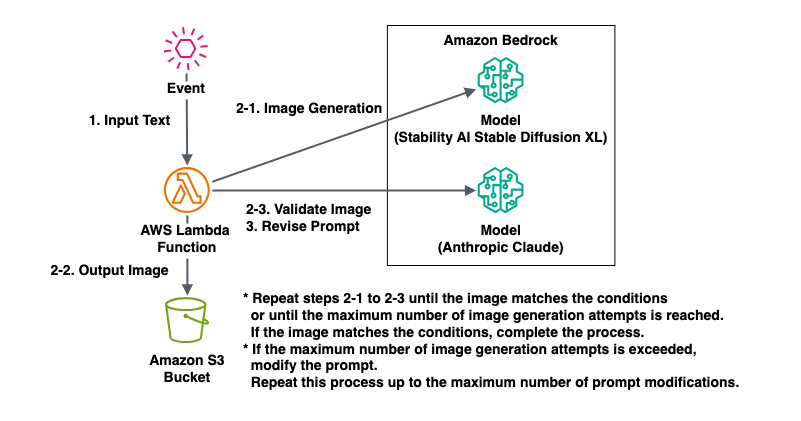

Architecture Diagram and Process Flow

The architecture diagram to realize this theme is as follows:

Here's a detailed explanation of this process flow:

1. Input an event containing prompts and parameters. 2-1. Execute the SDXL model on Amazon Bedrock with the input prompt instructing image creation. 2-2. Save the generated image to Amazon S3. 2-3. Execute the Claude 3.5 Sonnet model on Amazon Bedrock for the image saved in Amazon S3 to verify if it meets the requirements of the prompt that instructed image creation. * If it's not deemed suitable for the requirements of the prompt that instructed image creation, repeat processesThe key point in this process flow is the modification of the prompt instructing image creation by the Claude 3.5 Sonnet model.2-1to2-3for the specified number of executions with the same prompt. * If it's deemed suitable for the requirements of the prompt that instructed image creation, output that image as the result. 3. If the number of modified prompt executions has not been exceeded and the number of times deemed unsuitable for the requirements of the prompt that instructed image creation exceeds the number of executions with the same prompt, execute the Claude 3.5 Sonnet model on Amazon Bedrock to modify the prompt instructing image creation to one that is more likely to meet the requirements. Restart the process from2-1with this new prompt instructing image creation. * If the number of modified prompt executions is exceeded, end the process as an error.

If the prompt instructing image creation is easily understandable to AI, there's a high possibility that an image meeting the requirements will be output after several executions.

However, if the prompt instructing image creation is difficult for AI to understand, it's possible that an image meeting the requirements may not be output.

Therefore, when the specified number of executions with the same prompt is exceeded, I included a process to execute the Claude 3.5 Sonnet model on Amazon Bedrock and modify the prompt instructing image creation to an optimized one.

Implementation Example

Format of the Input Event

{

"prompt": "[Initial prompt for image generation]",

"max_retry_attempts": [Maximum number of attempts to generate an image for each prompt],

"max_prompt_revisions": [Maximum number of times to revise the prompt],

"output_s3_bucket_name": "[Name of the S3 bucket to store generated images]",

"output_s3_key_prefix": "[Prefix for the S3 key of generated images]",

"claude_validate_temperature": [Temperature parameter for Claude model during image validation (0.0 to 1.0)],

"claude_validate_top_p": [Top-p parameter for Claude model during image validation (0.0 to 1.0)],

"claude_validate_top_k": [Top-k parameter for Claude model during image validation],

"claude_validate_max_tokens": [Maximum number of tokens generated by Claude model during image validation],

"claude_revise_temperature": [Temperature parameter for Claude model during prompt revision (0.0 to 1.0)],

"claude_revise_top_p": [Top-p parameter for Claude model during prompt revision (0.0 to 1.0)],

"claude_revise_top_k": [Top-k parameter for Claude model during prompt revision],

"claude_revise_max_tokens": [Maximum number of tokens generated by Claude model during prompt revision],

"sdxl_cfg_scale": [CFG scale for Stable Diffusion XL model],

"sdxl_steps": [Number of inference steps for Stable Diffusion XL model],

"sdxl_width": [Width of the image generated by Stable Diffusion XL model (in pixels)],

"sdxl_height": [Height of the image generated by Stable Diffusion XL model (in pixels)],

"sdxl_seed": [Random seed used by Stable Diffusion XL model (for reproducibility, random if not specified)]

}

Example of Input Event

{

"prompt": "A serene landscape with mountains and a lake",

"max_retry_attempts": 5,

"max_prompt_revisions": 3,

"output_s3_bucket_name": "your-output-bucket-name",

"output_s3_key_prefix": "generated-images",

"claude_validate_temperature": 1.0,

"claude_validate_top_p": 0.999,

"claude_validate_top_k": 250,

"claude_validate_max_tokens": 4096,

"claude_revise_temperature": 1.0,

"claude_revise_top_p": 0.999,

"claude_revise_top_k": 250,

"claude_revise_max_tokens": 4096,

"sdxl_cfg_scale": 30,

"sdxl_steps": 150,

"sdxl_width": 1024,

"sdxl_height": 1024,

"sdxl_seed": 0

}

Source Code

The source code implemented this time is as follows:

# #Event Sample

# {

# "prompt": "A serene landscape with mountains and a lake",

# "max_retry_attempts": 5,

# "max_prompt_revisions": 3,

# "output_s3_bucket_name": "your-output-bucket-name",

# "output_s3_key_prefix": "generated-images",

# "claude_validate_temperature": 1.0,

# "claude_validate_top_p": 0.999,

# "claude_validate_top_k": 250,

# "claude_validate_max_tokens": 4096,

# "claude_revise_temperature": 1.0,

# "claude_revise_top_p": 0.999,

# "claude_revise_top_k": 250,

# "claude_revise_max_tokens": 4096,

# "sdxl_cfg_scale": 30,

# "sdxl_steps": 150,

# "sdxl_width": 1024,

# "sdxl_height": 1024,

# "sdxl_seed": 0

# }

import boto3

import json

import base64

import os

import sys

from io import BytesIO

import datetime

import random

region = os.environ.get('AWS_REGION')

bedrock_runtime_client = boto3.client('bedrock-runtime', region_name=region)

s3_client = boto3.client('s3', region_name=region)

def claude3_5_invoke_model(input_prompt, image_media_type=None, image_data_base64=None, model_params={}):

messages = [

{

"role": "user",

"content": [

{

"type": "text",

"text": input_prompt

}

]

}

]

if image_media_type and image_data_base64:

messages[0]["content"].insert(0, {

"type": "image",

"source": {

"type": "base64",

"media_type": image_media_type,

"data": image_data_base64

}

})

body = {

"anthropic_version": "bedrock-2023-05-31",

"max_tokens": model_params.get('max_tokens', 4096),

"messages": messages,

"temperature": model_params.get('temperature', 1.0),

"top_p": model_params.get('top_p', 0.999),

"top_k": model_params.get('top_k', 250),

"stop_sequences": ["\n\nHuman:"]

}

response = bedrock_runtime_client.invoke_model(

modelId='anthropic.claude-3-5-sonnet-20240620-v1:0',

contentType='application/json',

accept='application/json',

body=json.dumps(body)

)

response_body = json.loads(response.get('body').read())

response_text = response_body["content"][0]["text"]

return response_text

def sdxl_invoke_model(prompt, model_params={}):

seed = model_params.get('seed', 0)

if seed == 0:

seed = random.randint(0, 4294967295)

body = {

"text_prompts": [{"text": prompt}],

"cfg_scale": model_params.get('cfg_scale', 10),

"steps": model_params.get('steps', 50),

"width": model_params.get('width', 1024),

"height": model_params.get('height', 1024),

"seed": seed

}

print(f"SDXL model parameters: {body}")

response = bedrock_runtime_client.invoke_model(

body=json.dumps(body),

modelId="stability.stable-diffusion-xl-v1",

contentType="application/json",

accept="application/json"

)

response_body = json.loads(response['body'].read())

image_data = base64.b64decode(response_body['artifacts'][0]['base64'])

print(f"Image generated successfully with seed: {seed}")

return image_data

def save_image_to_s3(image_data, bucket, key):

s3_client.put_object(

Bucket=bucket,

Key=key,

Body=image_data

)

print(f"Image saved to S3: s3://{bucket}/{key}")

def validate_image(image_data, prompt, claude_validate_params):

image_base64 = base64.b64encode(image_data).decode('utf-8')

input_prompt = f"""Does this image match the following prompt? Prompt: {prompt}.

Please answer in the following JSON format:

{{"result":"", "reason":""}}

Ensure your response can be parsed as valid JSON. Do not include any explanations, comments, or additional text outside of the JSON structure."""

validation_result = claude3_5_invoke_model(input_prompt, "image/png", image_base64, claude_validate_params)

try:

print(f"validation Result: {validation_result}")

parsed_result = json.loads(validation_result)

is_valid = parsed_result['result'].upper() == 'YES'

print(f"Image validation result: {is_valid}")

print(f"Validation reason: {parsed_result['reason']}")

return is_valid

except json.JSONDecodeError:

print(f"Error parsing validation result: {validation_result}")

return False

def revise_prompt(original_prompt, claude_revise_params):

input_prompt = f"""Revise the following image generation prompt to optimize it for Stable Diffusion, incorporating best practices:

{original_prompt}

Please consider the following guidelines in your revision:

1. Be specific and descriptive, using vivid adjectives and clear nouns.

2. Include details about composition, lighting, style, and mood.

3. Mention specific artists or art styles if relevant.

4. Use keywords like "highly detailed", "4k", "8k", or "photorealistic" if appropriate.

5. Separate different concepts with commas.

6. Place more important elements at the beginning of the prompt.

7. Use weights (e.g., (keyword:1.2)) for emphasizing certain elements if necessary.

8. If the original prompt is not in English, translate it to English.

Your goal is to create a clear, detailed prompt that will result in a high-quality image generation with Stable Diffusion.

Please provide your response in the following JSON format:

{{"revised_prompt":""}}

Ensure your response can be parsed as valid JSON. Do not include any explanations, comments, or additional text outside of the JSON structure."""

revised_prompt_json = claude3_5_invoke_model(input_prompt, model_params=claude_revise_params)

print(f"Original prompt: {original_prompt}")

print(f"Revised prompt JSON: {revised_prompt_json.strip()}")

try:

parsed_result = json.loads(revised_prompt_json)

revised_prompt = parsed_result['revised_prompt']

print(f"Parsed revised prompt: {revised_prompt}")

return revised_prompt

except json.JSONDecodeError:

print(f"Error parsing revised prompt result: {revised_prompt_json}")

return original_prompt

def lambda_handler(event, context):

try:

initial_prompt = event['prompt']

prompt = initial_prompt

max_retry_attempts = max(0, event.get('max_retry_attempts', 5) - 1)

max_prompt_revisions = max(0, event.get('max_prompt_revisions', 3) - 1)

output_s3_bucket_name = event['output_s3_bucket_name']

output_s3_key_prefix = event.get('output_s3_key_prefix', 'generated-images')

print(f"Initial prompt: {initial_prompt}")

print(f"Max retry attempts: {max_retry_attempts}")

print(f"Max prompt revisions: {max_prompt_revisions}")

# Model parameters

claude_validate_params = {

'temperature': event.get('claude_validate_temperature', 1.0),

'top_p': event.get('claude_validate_top_p', 0.999),

'top_k': event.get('claude_validate_top_k', 250),

'max_tokens': event.get('claude_validate_max_tokens', 4096)

}

claude_revise_params = {

'temperature': event.get('claude_revise_temperature', 1.0),

'top_p': event.get('claude_revise_top_p', 0.999),

'top_k': event.get('claude_revise_top_k', 250),

'max_tokens': event.get('claude_revise_max_tokens', 4096)

}

sdxl_params = {

'cfg_scale': event.get('sdxl_cfg_scale', 7),

'steps': event.get('sdxl_steps', 50),

"width": event.get('sdxl_width', 1024),

"height": event.get('sdxl_height', 1024),

"seed": event.get('sdxl_seed', 0)

}

print(f"Claude validate params: {claude_validate_params}")

print(f"Claude revise params: {claude_revise_params}")

print(f"SDXL params: {sdxl_params}")

# Generate start timestamp and S3 key

start_timestamp = datetime.datetime.now().strftime("%Y%m%d%H%M%S")

for revision in range(max_prompt_revisions + 1):

print(f"Starting revision {revision}")

for attempt in range(max_retry_attempts + 1):

print(f"Attempt {attempt} for generating image")

# Generate image with SDXL

image_data = sdxl_invoke_model(prompt, sdxl_params)

image_key = f"{output_s3_key_prefix}-{start_timestamp}-{revision:03d}-{attempt:03d}.png"

# Save image to S3

save_image_to_s3(image_data, output_s3_bucket_name, image_key)

# Validate image with Claude

is_valid = validate_image(image_data, initial_prompt, claude_validate_params)

if is_valid:

print("Valid image generated successfully")

return {

'statusCode': 200,

'body': json.dumps({

'status': 'SUCCESS',

'message': 'Image generated successfully',

'output_s3_bucket_url': f'https://s3.console.aws.amazon.com/s3/buckets/{output_s3_bucket_name}',

'output_s3_object_url': f'https://s3.console.aws.amazon.com/s3/object/{output_s3_bucket_name}?region={region}&prefix={image_key}'

})

}

# If max retry attempts reached and not the last revision, revise prompt

if revision < max_prompt_revisions:

print("Revising prompt")

prompt = revise_prompt(initial_prompt, claude_revise_params)

print("Failed to generate a valid image after all attempts and revisions")

return {

'statusCode': 400,

'body': json.dumps({

'status': 'FAIL',

'error': 'Failed to generate a valid image after all attempts and revisions'

})

}

except Exception as ex:

print(f'Exception: {ex}')

tb = sys.exc_info()[2]

err_message = f'Exception: {str(ex.with_traceback(tb))}'

print(err_message)

return {

'statusCode': 500,

'body': json.dumps({

'status': 'FAIL',

'error': err_message

})

}

The points of ingenuity in this source code include the following:- Implemented a mechanism to automate the cycle of image generation and validation, repeating until requirements are met

- Used Claude 3.5 Sonnet for validating generated images and revising prompts

- Used Stable Diffusion XL for high-quality image generation

- Included specific best practices for image generation in the prompt revision instructions

- Made image generation parameters (cfg_scale, steps, width, height, seed) customizable

- Made Claude 3.5 Sonnet invocation parameters (temperature, top_p, top_k, max_tokens) adjustable

- Automatically saved generated images to S3 bucket and returned the result URL

- Implemented appropriate error handling and logging to facilitate troubleshooting

- Used JSON format to structure dialogues with Claude, making result parsing easier

- Made maximum retry attempts and maximum prompt revisions configurable to prevent infinite loops

Execution Details and Results

An Example of Execution: Input Parameters

{

"prompt": "自然の中から見た夜景で、空にはオーロラと月と流星群があり、地上には海が広がって流氷が流れ、地平線から太陽が出ている無人の写真。",

"max_retry_attempts": 5,

"max_prompt_revisions": 5,

"output_s3_bucket_name": "ho2k.com",

"output_s3_key_prefix": "generated-images",

"claude_validate_temperature": 1,

"claude_validate_top_p": 0.999,

"claude_validate_top_k": 250,

"claude_validate_max_tokens": 4096,

"claude_revise_temperature": 1,

"claude_revise_top_p": 0.999,

"claude_revise_top_k": 250,

"claude_revise_max_tokens": 4096,

"sdxl_cfg_scale": 30,

"sdxl_steps": 150,

"sdxl_width": 1024,

"sdxl_height": 1024,

"sdxl_seed": 0

}

* The Japanese text set in the prompt above translates to the following meaning in English:"A night view from nature, with aurora, moon, and meteor shower in the sky, the sea spreading on the ground with drifting ice, and the sun rising from the horizon in an uninhabited photograph."

In this execution, I am attempting to optimize instructions given in Japanese sentences that are not optimized as prompts for Stable Diffusion XL through prompt modification by Claude 3.5 Sonnet.

The input parameters for this execution example include the following considerations:

max_retry_attemptsis set to 5 to increase the success rate of image generation.max_prompt_revisionsis set to 5, providing more opportunities to improve the prompt if needed.- Parameters for Claude model for image validation and revision (temperature, top_p, top_k, max_tokens) are finely set.

sdxl_cfg_scaleis set to 30 to increase fidelity to the prompt.sdxl_stepsis set to 150 to improve the quality of image generation.- The

seedused for image generation is set to be random, ensuring different images are generated each time.

An Example of Execution: Results

Generated Image

The final image that met the prompt requirements and passed verification in this trial is shown below.This image actually meets almost all the requirements of "自然の中から見た夜景で、空にはオーロラと月と流星群があり、地上には海が広がって流氷が流れ、地平線から太陽が出ている無人の写真。"(The meaning is "A night view from nature, with aurora, moon, and meteor shower in the sky, the sea spreading on the ground with drifting ice, and the sun rising from the horizon in an uninhabited photograph.")

(While the visualization of the meteor shower and drifting ice is weak, the contradictory scenery of the moon and the sun on the horizon is clearly expressed.)

Also, compared to other images generated earlier (see "List of Generated Images" below), I confirmed that the final image that passed verification satisfied more of the specified requirements.

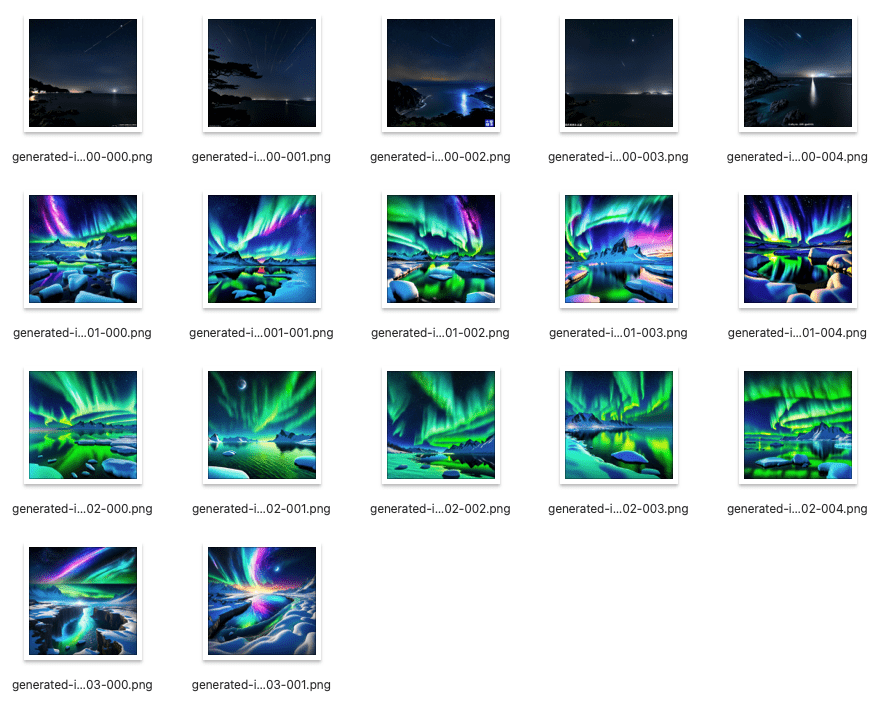

Here is a list of images generated during this trial run.

Each row of images in this "List of Generated Images" was generated from different modified prompts.

While the image output from the initial Japanese text prompt is far from the requirements, the images output from the first prompt modification onwards are closer to the requirements.

Changes in Modified Prompts

Each row of images in the "List of Generated Images" shown above was generated from different modified prompts.Specifically, the image in the first row of the "List of Generated Images" was generated from the "0th modification" prompt below, while the image in the last row was generated from the "3rd modification" prompt below.

Let's look at the content of the modified image generation prompts for each number of prompt modifications.

0th modification

自然の中から見た夜景で、空にはオーロラと月と流星群があり、地上には海が広がって流氷が流れ、地平線から太陽が出ている無人の写真。* The meaning is "A night view from nature, with aurora, moon, and meteor shower in the sky, the sea spreading on the ground with drifting ice, and the sun rising from the horizon in an uninhabited photograph."

1st modification

Breathtaking night landscape, vibrant aurora borealis (aurora:1.2) dancing across the starry sky, crescent moon illuminating the scene, meteor shower streaking through the atmosphere, vast arctic ocean with floating ice floes, midnight sun peeking over the horizon, ethereal glow, long exposure photography, ultra high resolution 8k, photorealistic, highly detailed, dramatic lighting, panoramic composition, inspired by Aurora Borealis paintings of Frederic Edwin Church2nd modification

Breathtaking Arctic night landscape, vibrant green aurora borealis dancing across the starry sky, full moon illuminating the scene, meteor shower streaking through the atmosphere, vast icy ocean with floating ice floes, midnight sun peeking over the horizon, ultra-high resolution 8K, photorealistic, highly detailed, dramatic lighting, panoramic composition, inspired by Albert Bierstadt and Frederic Edwin Church, (aurora borealis:1.3), (meteor shower:1.2), serene and majestic atmosphere3rd modification

Breathtaking nightscape from nature's perspective, vibrant aurora borealis (aurora:1.2) dancing across the starry sky, crescent moon illuminating the scene, meteor shower streaking through the atmosphere, vast ocean with floating ice floes, sun peeking over the horizon creating a golden glow, no human presence, photorealistic, highly detailed, 8k resolution, dramatic lighting, wide-angle composition, inspired by Thomas Kinkade and Aurora HDR style, serene and awe-inspiring moodParticularly when viewed in conjunction with the "List of Generated Images" mentioned above, it becomes apparent that the initial Japanese text prompt was not optimized for image generation, resulting in output images that deviate significantly from the requirements.

On the other hand, from the first revision onwards, Claude 3.5 Sonnet modified the prompt to be optimized for image generation, leading to output images that more closely align with the requirements.

In this way, the images changed with each prompt modification and generation execution, and ultimately, an image that met the prompt requirements passed verification.

References:

Tech Blog with curated related content

AWS Documentation(Amazon Bedrock)

Summary

In this article, I introduced an example of using Amazon Bedrock to validate images generated by Stability AI Stable Diffusion XL (SDXL) using the image recognition feature of Anthropic Claude 3.5 Sonnet, and regenerate them until they meet the requirements.From the results of this attempt, I found that the image recognition feature of the Claude 3.5 Sonnet can recognize not only OCR but also what is depicted in the image and what it expresses, and can be used to verify whether the image meets the requirements.

I also confirmed that the Claude 3.5 Sonnet can modify and optimize the content of the prompt itself.

Moreover, and most importantly, by automatically determining whether generated images meet requirements using these functions, I was able to significantly reduce the amount of human visual inspection work.

This image recognition feature and high text editing capability of the Claude 3.5 Sonnet can potentially be applied to processes that have been difficult to automate until now, including controlling other image generation AI models as in this example.

I will continue to watch for updates to AI models supported by Amazon Bedrock, including Anthropic Claude models, implementation methods, and combinations with other services.

Written by Hidekazu Konishi